Introduction

This post continues documenting the process of optimizing a small problem - generating images of the Mandelbrot Set. This builds on what I wrote in part 1 and gets to speeds of up to 130x the naive case. Again, this is mostly meant as an exercise to demonstrate how I go about using my knowledge of software design and computer architecture to optimize software.

This post will be divided into four sections: a brief recap and some notes on the feedback I got from the last post, a section on micro-optimization where I get a small (~1.2x) extra improvement, a section on point pruning where contour following gives a significant improvement, and finally some notes on multi-threading. Again, many of the sections are independent of each other, so feel free to use the Contents to go to the sections of interest.

Most of this code can be found on GitHub (still undergoing a little cleanup).

Contents

- Introduction

- Contents

- Recap and feedback notes

- Micro-optimization

- -ffast-math revisited

- Instruction substitution: floating point comparison

- Optimizing where it counts

- Code de-duplicating

- The Critical Path

- Instruction substitution: reduction in strength of calculating \(2x\)

- Instruction substitution: making more use of multiply-add and multiply-subtract

- Not checking the condition every iteration

- Best code so far

- Cost of looking back

- Point pruning

- Multi-threading

- Conclusion

Recap and feedback notes

This builds heavily on part 1, so that is definitely worth reading first, but to summarize:

I wrote a naive version of a Mandelbrot Set generator, set up some tests, then used various techniques (compiler flags, sheep race, software pipelining, vectorizing) to speed this up by about a factor of 8.

The tests are:

Zoom is set at the effective maximum of 8589934592000 and max iterations of 50000, to use in testing, the first three (A,B,C) to represent the sort of workloads that might typically be found zooming in, and the fourth (D) to represent the slowest possible example.

| test | \(c_x\) | \(c_y\) | black | detail |

|---|---|---|---|---|

| A | -0.57245092932760 | 0.563219321276942 | lots | lots |

| B | -0.57245092932763 | 0.563219321276842 | little | lots |

| C | -0.57245092932663 | 0.563219321276852 | little | little |

| D | 0 | 0 | all | none |

Test A looks like this (the others are shown in part 1):

The approximately 8x speedup was given with a version called “render2_avx_sheeprace”

| Test A | Test B | Test C | Test D | |

|---|---|---|---|---|

| naive | 973.4 | 68.7 | 41.2 | 3550.7 |

| render2_avx_sheeprace | 119.7 | 8.8 | 5.4 | 436.8 |

Feedback and amendments from part 1

Cunningham’s Law states: “The best way to get the right answer on the internet is not to ask a question; it’s to post the wrong answer.” I suspect there is a corollary to that, where the best way to get optimized code is to post code that is not quite optimized. It’s a good reminder that one person can’t think of everything.

-

Boudewijn has provided a lot of useful suggestions and code and is a large contributor to the section on micro-optimization, particularly some instruction substitutions.

-

thanatos519 suggested checking for the main cardioid and circle first. That is a very low overhead optimization, but I’m going to leave that out, because most deep zooms won’t intersect with those - the purpose of test D was to provide a way of measuring the raw loop speed. Edge/contour following will also deal with any large black areas.

-

What I called “interleaving” is more properly called “software pipelining”. Part 1 has been amended to reflect that.

-

Although my processor reports that it is 2.9 GHz, it runs at 3192.7 MHz under load. This has been amended in the conclusion of part 1.

-

To those who asked for an RSS link - I still haven’t managed to get that working. My optimization skills are way ahead of my website design skills.

Micro-optimization

TL;DR - in this section, I get to try a lot of stuff, it gets a bit messy with all the different options (as is always the case of trying to optimize something already fast) and I get some minor speed gains. The main lesson from this section is that it it possible to keep optimizing, but it becomes more and more effort for less and less reward. The real speed gains happen in the next section on Point Pruning.

In part one, I looked at large optimizations like sheep race, software pipelining and vectorizing. It is usually also possible to get some speed improvements by considering instruction selection, choice of where to inline functions and compiler hints - these are micro-optimizations. There is always a lot of trial and error here - there are three translation steps where transformation can happen:

-

from the mind of the coder to the code,

-

by the compiler from the code to machine code (only a change in form if using assembler),

-

by the CPU from machine code to the internal μops.

The result of this is that the optimizations will interact in non-obvious ways: optimization X might be faster/slower on its own, but add in optimization Y and optimization X now has the reverse effect and makes things slower/faster.

-ffast-math revisited

When testing out various optimizations, I accidentally had -ffast-math turned off for some of the tests I was running and got some anomalous results. It turns out that for render2_avx_sheeprace, -ffast-math makes things slower. Here are the results without -ffast-math:

| Test A | Test B | Test C | Test D | |

|---|---|---|---|---|

| render2_avx_sheeprace | 118.5 | 8.7 | 5.4 | 432.5 |

My guess is that as I start to use intrinsics and carefully choose what instructions I am using, I am more informed than the compiler about some of the instruction selection, so -ffast-math just gives the compiler an opportunity to make things worse. -ffast-math gets turned off from now on.

Instruction substitution: floating point comparison

Due to the layout specified by IEEE-754, non-negative floats and doubles can be compared using integer operations. On more modern hardware than I’m using, a 2-3% speedup was achieved by replacing

_mm256_cmp_pd(s*_mag, four_double, _CMP_GT_OS)

by

_mm256_cmpgt_epi64(s*_mag, four_double)

Profiling is an important part of exercise, so I tried this out for myself:

| Test A | Test B | Test C | Test D | |

|---|---|---|---|---|

| render2_avx_sheeprace (int compare) | 129.4 | 9.4 | 5.8 | 463.5 |

So it’s slower. Why? It is because I’m using an older microprocessor.

Looking at a portion of the Instruction tables: Lists of instruction latencies, throughputs and micro-operation breakdowns for Intel, AMD and VIA CPUs, for the processors Haswell (which I’m using), Broadwell:

| Intrinsic | Ports | Latency | Reciprocal Throughput |

|---|---|---|---|

| _mm256_cmp_pd | p1 | 3 | 1 |

| _mm256_cmpgt_epi64 | p0 | 5 | 1 |

For more modern processors Skylake, SkylakeX, CoffeeLake, CannonLake, IceLake:

| Intrinsic | Ports | Latency | Reciprocal Throughput |

|---|---|---|---|

| _mm256_cmp_pd | p01 | 4 | 0.5 |

| _mm256_cmpgt_epi64 | p5 | 3 | 1 |

Also, when mixing integer and floating point instructions on vector registers, there is something “data bypass delay” that occasionally costs a clock cycle, depending on processor generation.

As a result, _mm256_cmp_pd is likely faster on Broadwell and earlier, _mm256_cmpgt_epi64 is likely faster on Skylake or later.

If this was part of a product I intended to ship, I would have two main choices:

-

Go with the just the Skylake or later version, as it was launched in 2015 so that is in the majority of machines, on the basis of optimizing preferentially for the most recent hardware,

-

Have two versions (or more if there are other places like this) of the loop, and use the

cpuidinstruction to determine which one to run.

I’m using a Haswell processor for this exercise so need to be able to do timings, I don’t want the complexity of the cpuid route but want people to get the benefits of later processors, so I will define some macros:

#ifdef BROADWELL_OR_EARLIER

#define AVX_CMP_GT_4_64(X,Y) _mm256_cmp_pd((X),(Y),_CMP_GT_OS)

#else

#define AVX_CMP_GT_4_64(X,Y) _mm256_cmpgt_epi64((X),(Y))

#endif

then I use -DBROADWELL_OR_EARLIER=1 as a compiler flag.

Optimizing where it counts

The inner loop has an unusual profile:

while(true) {

// Block A: code run a lot

...

if(!s1_count == 0 || s1_mag_squared > 4)) {

// Block B0:

// Code run much less - only for changing a stream point

// AVX versions have a loop in here

...

}

...

if(!sn_count == 0 || sn_mag_squared > 4)) {

// Block Bn:

// Code run much less - only for changing a stream point

// AVX versions have a loop in here

}

// Block C: code run a lot

...

}

For the purposes of optimizing and register spilling, it is much more important that blocks A and C are optimized well and don’t spill registers than it is for blocks B0..Bn. This can be confirmed by profiling.

How do I tell the compiler that the second piece of code should get the registers and optimization attention?

There used to be a register keyword, but that as been removed as of C++-17, and was largely ignored by compilers before that anyway, so that’s not an option.

One thing I tried was to split the get_next_point out into a function using __attribute__((noinline)) (it inlines by default):

bool __attribute__((noinline)) MandelbrotStateEngineSimple2::get_next_point(

double& px, double& py, uint32_t&x, uint32_t& y) {

...

}

| Test A | Test B | Test C | Test D | |

|---|---|---|---|---|

| render2_avx_sheeprace (noinline) | 561.5 | 40.1 | 24.2 | 2049.3 |

This is way worse. There are times when it is good to override the compiler’s inlining using __attribute__((noinline)) or inline __attribute__((always_inline)), but that should only be done with profiling, and this is not one of those times.

I experimented with __attribute__((preserve_all)) as well as __attribute__((noinline)) but that added in bugs I couldn’t track down, and there was very little I could find online discussing this.

C++-20 offers hinting using [[unlikely]]:

if(!_mm256_testz_pd(cmp2, cmp2)) [[unlikely]] {

...

}

| Test A | Test B | Test C | Test D | |

|---|---|---|---|---|

| render2_avx_sheeprace [[unlikely]] | 123.0 | 9.0 | 5.5 | 448.3 |

So using [[unlikely]] is worse than leaving it out? This highlights two things, firstly to always test if you are going for peak speed, and secondly that the changes made can be unpredictable.

Clang and gcc also have __builtin_expect:

if(__builtin_expect(!_mm256_testz_pd(cmp2, cmp2),0)) {

...

}

This (for this example anyway) produces identical code to the [[unlikely]] case, so I’m going to stick with [[unlikely]] (if using it at all) since it is more standard.

Code de-duplicating

The code for render2_avx_sheeprace is a bit repetitive, and unnecessary repetition is a rich source of bugs, so I want to clean that up.

I replace:

__m256d cmp2 = _mm256_or_pd((__m256d)s1_count, AVX_CMP_GT_4_64(s1_mag, four_double));

if(!_mm256_testz_pd(cmp2, cmp2)) {

...

}

cmp2 = _mm256_or_pd((__m256d)s2_count, AVX_CMP_GT_4_64(s2_mag, four_double));

if(!_mm256_testz_pd(cmp2, cmp2)) {

...

}

by:

template<class M> inline bool test_inline2(M& mse, iterations_t* pp, uint32_t width,

iterations_t iterations, uint32_t* dx, uint32_t* dy,__m256d& cmp2,

__m256d& cx,__m256d& cy,__m256d& x,__m256d& y,

__m256d& x_squared,__m256d& y_squared,__m256i& count) {

if(!_mm256_testz_pd(cmp2, cmp2)) {

...

}

return false;

}

...

__m256d cmp2 = _mm256_or_pd((__m256d)s1_count, AVX_CMP_GT_4_64(s1_mag, four_double));

if(test_inline2(mse,pp,width,iterations,s1_dx,s1_dy,cmp2,s1_cx,s1_cy,s1_x,s1_y,

s1_x_squared,s1_y_squared,s1_count)) {

cleanup(mse);

return;

}

cmp2 = _mm256_or_pd((__m256d)s2_count, AVX_CMP_GT_4_64(s2_mag, four_double));

if(test_inline2(mse,pp,width,iterations,s2_dx,s2_dy,cmp2,s2_cx,s2_cy,s2_x,s2_y,

s2_x_squared,s2_y_squared,s2_count)) {

cleanup(mse);

return;

}

Why does this belong in a section of micro-optimization? Because even with the template being inlined (it will be), this is not exactly the same code - for one thing, different memory locations will be used for some temporary storage. This seems to give the compiler another roll of the dice, and can produce different results which might be better or worse. If I wanted to remove the repetition but produce exactly the same code, I could pass all the memory locations as parameters so that the compiler produces the same code, or just use macros. I’m calling to call that change “test call” in the timing results.

| [[unlikely]] | int compare | test call | Test A | Test B | Test C | Test D |

|---|---|---|---|---|---|---|

| 118.5 | 8.7 | 5.4 | 432.5 | |||

| • | 123.0 | 9.0 | 5.5 | 448.3 | ||

| • | 129.4 | 9.6 | 5.9 | 471.7 | ||

| • | • | 127.4 | 9.4 | 5.6 | 463.5 | |

| • | 167.5 | 12.1 | 7.4 | 609.9 | ||

| • | • | 118.9 | 8.7 | 5.4 | 432.0 |

The Critical Path

A program can’t run faster than what it is limited to by the longest critical path, so my next step is to work out what that is. The sheep race optimization has likely removed the magnitude calculation and comparisons from the critical path, so all that is left is calculating \(x\) and \(y\).

Using Haswell timings to annotate the links, I get this:

There are three paths:

-

from

y=2x*y+cyto itself takes 5 clock cycles, -

from

x=xs-ttoxs=x*xand back takes 8 clock cycles, -

the outer loop takes 19 clock cycles but happens over two iterations, so it effectively 9.5 clock cycles per iteration.

The minimum number of clock cycles for an iteration is 9.5.

Looking at the best case so far for Test D, it took \(422.8\) seconds to run \(50000 \times 1000 \times 1000 \times 20 \times \frac{1}{8}\) iterations on a 2.90 GHz processor (which seems to run at 3192.7 MHz under load). This works out about 11.1 clock cycles per iteration, which is very close to the 9.5 theoretical best. No major further improvements are going to be made without reducing this critical path.

Instruction substitution: reduction in strength of calculating \(2x\)

A suggested instruction substitution is to make use of the IEEE-754 format and perform the \(2x\) by incrementing the exponent by 1 rather than adding \(x\) to itself. So replace:

_mm256_add_pd(sn_x, sn_x)

by

__m256i add_one_exp = _mm256_set1_epi64x(0x10000000000000);

...

(__m256d)_mm256_add_epi64((__m256i)sn_x, add_one_exp)

Looking at the timing for this (on Haswell, although later processors don’t change this much):

| Intrinsic | Ports | Latency | Reciprocal Throughput |

|---|---|---|---|

| _mm256_add_pd | p1 | 3 | 1 |

| _mm256_add_epi64 | p15 | 1 | 0.5 |

However, there is something called a “data bypass delay” to consider.

From Agner Fog’s The microarchitecture of Intel, AMD, and VIA CPUs, page 144: The execution units are divided into domains as described on page 115, and there are sometimes a delay of one clock cycle when the output of an instruction in the integer domain is used as input for an instruction in the floating point domain or vice versa.

sn_x gets moved from the floating point domain to the integer domain for the _mm256_add_epi64, and the result is moved from the integer domain to the floating point domain, so the worst case is 2 extra clock cycles, making it a wash with the floating point add version. This is less likely to be an issue on later processor generations. From the results, I’m going to guess that this instruction takes two clock cycles.

Also note that an extra register or memory read is required to access add_one_exp.

Trying this out:

| [[unlikely]] | test call | add exponent | Test A | Test B | Test C | Test D |

|---|---|---|---|---|---|---|

| • | • | 165.6 | 12.13 | 7.5 | 603.7 | |

| • | • | • | 115.5 | 8.5 | 5.3 | 420.1 |

This is a very small improvement.

Instruction substitution: making more use of multiply-add and multiply-subtract

The code I’ve been using which includes calculating \(x^2\) and \(y^2\) and using them both for the calculation of the next \(x\) and next magnitude\(^2\) uses the smallest amount of floating point compute resources, but doesn’t create the smallest critical path. Making better use of FMA instructions that fuse multiplication and addition into a single instruction (_mm256_fmadd_pd and _mm256_fmsub_pd in this case) can reduce the critical path, at the expense of one or both of \(x^2\) and \(y^2\) having to be calculated twice.

The sequence:

ys = y * y

t = ys - cx

can be replaced by the single instruction:

t = y * y - cx

That takes the critical path down to 15 clock cycles for two iterations.

It would also be possible to do the same for xs, by replacing

xs = x * x

x = xs - t

by the single instruction:

x = x * x - t

but on Haswell, that makes the cycle 17 clock cycles. This is not a problem on later processors - Skylake and onwards have a latency of 4 for addition, subtraction, multiplication and the fused instructions.

Trying each of these (with [[unlikely]], test call, add exponent:

| Test A | Test B | Test C | Test D | |

|---|---|---|---|---|

| FMA on y*y | 112.6 | 8.3 | 5.1 | 410.2 |

| FMA on y*y and x*x | 112.7 | 8.3 | 5.1 | 411.0 |

This is a very small improvement, but the critical path is no longer the bottleneck.

Not checking the condition every iteration

So far, I have checked for divergence (\(x^2+y^2 > 4\)) every iteration. One optimizing technique is to not check for divergence every iteration, and check say every second, third or fourth one. That does reduce the amount of code that needs to be run per iteration (and several iterations will now happen in one loop), but does have the overhead that old values need to be stored, and once divergence is detected, the code needs to go back and iterate to find in which iteration the divergence occurred.

The inner loop now looks something like this (for the case of checking every two iterations):

while(true) {

if(test_inline2ux(mse, pp, width, iterations, s1_dx, s1_dy, s1_cmp2, s1_cx, s1_cy,

s1_x, s1_y, s1_x_squared, s1_y_squared, s1_mag, s1_count)) {

return;

}

if(test_inline2ux(mse, pp, width, iterations, s2_dx, s2_dy, s2_cmp2, s2_cx, s2_cy,

s2_x, s2_y, s2_x_squared, s2_y_squared, s2_mag, s2_count)) {

return;

}

// make a temp copy of y

__m256d s1_temp_y = s1_y;

__m256d s2_temp_y = s2_y;

// s1_y_squared = s1_y * s1_y;

s1_y_squared = _mm256_mul_pd(s1_y, s1_y);

s2_y_squared = _mm256_mul_pd(s2_y, s2_y);

// s1_x_squared = s1_x * s1_x;

s1_x_squared = _mm256_mul_pd(s1_x, s1_x);

s2_x_squared = _mm256_mul_pd(s2_x, s2_x);

// s1_y = 2 * s1_y * s1_x + s1_cy;

s1_cmp_with_four=AVX_CMP_GT_4_64(s1_mag, four_double);

s2_cmp_with_four=AVX_CMP_GT_4_64(s2_mag, four_double);

s1_y = _mm256_fmadd_pd((__m256d)_mm256_add_epi64((__m256i)s1_x, a1exp), s1_y, s1_cy);

s2_y = _mm256_fmadd_pd((__m256d)_mm256_add_epi64((__m256i)s2_x, a1exp), s2_y, s2_cy);

// s1_x = s1_x_squared - s1_y_squared + s1_cx;

s1_x = _mm256_sub_pd(s1_x_squared, _mm256_fmsub_pd(s1_temp_y, s1_temp_y, s1_cx));

s2_x = _mm256_sub_pd(s2_x_squared, _mm256_fmsub_pd(s2_temp_y, s2_temp_y, s2_cx));

// make a temp copy of y

__m256d s1b_temp_y = s1_y;

__m256d s2b_temp_y = s2_y;

// s1_mag = s1_x_squared + s1_y_squared;

s1_mag = _mm256_add_pd(s1_x_squared, s1_y_squared);

s2_mag = _mm256_add_pd(s2_x_squared, s2_y_squared);

// s1_x_squared = s1_x * s1_x;

s1_x_squared = _mm256_mul_pd(s1_x, s1_x);

s2_x_squared = _mm256_mul_pd(s2_x, s2_x);

// s1_y = 2 * s1_y * s1_x + s1_cy;

s1_cmp2 = _mm256_or_pd((__m256d)s1_count, s1_cmp_with_four);

s2_cmp2 = _mm256_or_pd((__m256d)s2_count, s2_cmp_with_four);

// Decrement counter

s1_count = _mm256_sub_epi64(s1_count, one_int64);

s2_count = _mm256_sub_epi64(s2_count, one_int64);

s1_y = _mm256_fmadd_pd((__m256d)_mm256_add_epi64((__m256i)s1_x, a1exp), s1_y, s1_cy);

s2_y = _mm256_fmadd_pd((__m256d)_mm256_add_epi64((__m256i)s2_x, a1exp), s2_y, s2_cy);

// s1_x = s1_x_squared - s1_y_squared + s1_cx;

s1_x = _mm256_sub_pd(s1_x_squared, _mm256_fmsub_pd(s1b_temp_y, s1b_temp_y, s1_cx));

s2_x = _mm256_sub_pd(s2_x_squared, _mm256_fmsub_pd(s2b_temp_y, s2b_temp_y, s2_cx));

}

There are several advantages to doing this:

-

As stated above, the test code needs to be run less often,

-

The code now gets some of the benefits of loop unrolling,

-

The test can be spread out over all the Mandelbrot iterations in the loop, so the sheep run is no longer necessary, which reduces the register pressure.

Note The next tests were done just to see if there was a possible useful speedup. There was no looking back to get the correct number of iterations, so the number of iterations will be an over-count. This was to save development time - if there was no speedup ever without the looking back, then looking back won’t make things faster and this approach can be abandoned with minimal extra effort.

| Number of iterations unrolled in loop | Test A | Test B | Test C | Test D |

|---|---|---|---|---|

| 2 | 103.6 | 7.6 | 4.7 | 377.7 |

| 3 | 100.4 | 7.3 | 4.5 | 365.7 |

| 4 | 98.4 | 7.1 | 4.4 | 358.7 |

This is promising, so I implemented the looking back and did some more tests. I also tried higher levels of software pipelining. #iterations is the number of iterations unrolled in the loop, #pipeline is the number calculations interleaved in the software pipeline.

| #iterations | #pipeline | Test A | Test B | Test C | Test D |

|---|---|---|---|---|---|

| 4 | 3 | 123.7 | 7.4 | 4.6 | 461.3 |

| 4 | 4 | 98.0 | 7.6 | 4.6 | 356.8 |

| 4 | 5 | 104.6 | 8.0 | 4.7 | 380.7 |

So four iterations in the loop with four calculations interleaved in the software pipelines looks like a winner.

Is this the fastest? There are now lots of things to vary, remembering that these variations can interact in surprising ways:

-

whether to use the integer comparison instruction,

-

whether to use the integer add to the exponent,

-

[[unlikely]]or not, -

whether to have the test be a macro or inlined call,

-

the number of calculations interleaved in the software pipeline

-

the number of iterations in a loop.

The one that looks like it might make the most difference is the test being a macro or inlined call, so testing that, the winner is the call:

| Test A | Test B | Test C | Test D | |

|---|---|---|---|---|

| test macro | 100.4 | 7.7 | 4.8 | 364.0 |

| test call | 98.0 | 7.6 | 4.6 | 356.8 |

Best code so far

This has grown since the initial 10 line loop and can be found in the GitHub project as Mandelbrot_fastest_raw.cpp.

template<class M> inline __attribute__((always_inline)) bool test_diverge(M& mse,

iterations_t* pp, uint32_t width, iterations_t iterations,

uint32_t* dx, uint32_t* dy,

__m256d& cmp2,__m256d& cx,__m256d& cy,__m256d& x,__m256d& y,

__m256i& count, double* x_safe0, double* y_safe0, uint64_t* count_safe0,

double* x_safe1, double* y_safe1, uint64_t* count_safe1) {

if(!_mm256_testz_pd(cmp2, cmp2)) [[unlikely]] {

uint32_t mask = _mm256_movemask_pd(cmp2);

double cx_mem[4] __attribute__((__aligned__(32)));

double cy_mem[4] __attribute__((__aligned__(32)));

double x_mem[4] __attribute__((__aligned__(32)));

double y_mem[4] __attribute__((__aligned__(32)));

uint64_t count_mem[4] __attribute__((__aligned__(32)));

_mm256_store_pd(x_mem, x);

_mm256_store_pd(y_mem, y);

_mm256_store_si256((__m256i * )count_mem, count);

_mm256_store_pd(cx_mem, cx);

_mm256_store_pd(cy_mem, cy);

while(mask != 0) {

uint32_t b = __builtin_ctz(mask);

int64_t si=count_safe1[b];

double sx=x_safe1[b];

double sy=y_safe1[b];

double scx=cx_mem[b];

double scy=cy_mem[b];

while(si >= 0 && sx * sx + sy * sy <= 4.0) {

double nx = sx * sx - sy * sy + scx;

sy = 2.0 * sx * sy + scy;

sx = nx;

si--;

}

pp[dx[b] + dy[b] * width] = iterations - si;

if(!mse.get_next_point(cx_mem[b], cy_mem[b], dx[b], dy[b])) {

return true;

}

count_mem[b] = iterations;

x_mem[b] = y_mem[b] = 0;

//cmp2_mem[b] = 0;

mask = mask&~(1U<<b);

}

x = _mm256_load_pd(x_mem);

y = _mm256_load_pd(y_mem);

count = _mm256_load_si256((__m256i * )count_mem);

cx = _mm256_load_pd(cx_mem);

cy = _mm256_load_pd(cy_mem);

}

_mm256_store_pd(x_safe1,_mm256_load_pd(x_safe0));

_mm256_store_pd(x_safe0, x);

_mm256_store_pd(y_safe1,_mm256_load_pd(y_safe0));

_mm256_store_pd(y_safe0, y);

_mm256_store_si256((__m256i * )count_safe1,

_mm256_load_si256((__m256i * )count_safe0));

_mm256_store_si256((__m256i * )count_safe0, count);

return false;

}

inline __attribute__((always_inline)) void iterate4(

__m256d& s1_x, __m256d& s2_x, __m256d& s3_x, __m256d& s4_x,

__m256d& s1_y, __m256d& s2_y, __m256d& s3_y, __m256d& s4_y,

__m256d s1_cx, __m256d s2_cx, __m256d s3_cx, __m256d s4_cx,

__m256d s1_cy, __m256d s2_cy, __m256d s3_cy, __m256d s4_cy,

__m256i add_one_exp) {

__m256d s1b_temp_y = s1_y;

__m256d s2b_temp_y = s2_y;

__m256d s3b_temp_y = s3_y;

__m256d s4b_temp_y = s4_y;

// s1_x_squared = s1_x * s1_x;

__m256d s1_x_squared = _mm256_mul_pd(s1_x, s1_x);

__m256d s2_x_squared = _mm256_mul_pd(s2_x, s2_x);

__m256d s3_x_squared = _mm256_mul_pd(s3_x, s3_x);

__m256d s4_x_squared = _mm256_mul_pd(s4_x, s4_x);

// s1_y = 2 * s1_y * s1_x + s1_cy;

s1_y = _mm256_fmadd_pd((__m256d)_mm256_add_epi64((__m256i)s1_x, add_one_exp),

s1_y, s1_cy);

s2_y = _mm256_fmadd_pd((__m256d)_mm256_add_epi64((__m256i)s2_x, add_one_exp),

s2_y, s2_cy);

s3_y = _mm256_fmadd_pd((__m256d)_mm256_add_epi64((__m256i)s3_x, add_one_exp),

s3_y, s3_cy);

s4_y = _mm256_fmadd_pd((__m256d)_mm256_add_epi64((__m256i)s4_x, add_one_exp),

s4_y, s4_cy);

// s1_x = s1_x_squared - s1_y_squared + s1_cx;

s1_x = _mm256_sub_pd(s1_x_squared, _mm256_fmsub_pd(s1b_temp_y, s1b_temp_y, s1_cx));

s2_x = _mm256_sub_pd(s2_x_squared, _mm256_fmsub_pd(s2b_temp_y, s2b_temp_y, s2_cx));

s3_x = _mm256_sub_pd(s3_x_squared, _mm256_fmsub_pd(s3b_temp_y, s3b_temp_y, s3_cx));

s4_x = _mm256_sub_pd(s4_x_squared, _mm256_fmsub_pd(s4b_temp_y, s4b_temp_y, s4_cx));

}

template<class M> void Mandelbrot::fastest_so_far(iterations_t iterations,

uint32_t render_x, uint32_t render_y,

uint32_t render_width, uint32_t render_height) {

if(render_width == 0) {

render_width = width - render_x;

}

if(render_height == 0) {

render_height = height - render_y;

}

M mse(p, width, height, render_x, render_y,

render_width, render_height, xs, ys, inc, iterations);

iterations_t* pp = mse.p;

reset(pp, width, render_width, render_height);

iterations--;

__m256d s1_x = _mm256_setzero_pd();

__m256d s1_y = _mm256_setzero_pd();

__m256i s1_count = _mm256_set1_epi64x(iterations);

__m256d s1_cmp_with_four = _mm256_setzero_pd();

__m256d s1_cmp2 = _mm256_setzero_pd();

__m256d s2_x = _mm256_setzero_pd();

__m256d s2_y = _mm256_setzero_pd();

__m256i s2_count = _mm256_set1_epi64x(iterations);

__m256d s2_cmp_with_four = _mm256_setzero_pd();

__m256d s2_cmp2 = _mm256_setzero_pd();

__m256d s3_x = _mm256_setzero_pd();

__m256d s3_y = _mm256_setzero_pd();

__m256i s3_count = _mm256_set1_epi64x(iterations);

__m256d s3_cmp_with_four = _mm256_setzero_pd();

__m256d s3_cmp2 = _mm256_setzero_pd();

__m256d s4_x = _mm256_setzero_pd();

__m256d s4_y = _mm256_setzero_pd();

__m256i s4_count = _mm256_set1_epi64x(iterations);

__m256d s4_cmp_with_four = _mm256_setzero_pd();

__m256d s4_cmp2 = _mm256_setzero_pd();

__m256i one_int64 = _mm256_set1_epi64x(4);

__m256d four_double = _mm256_set1_pd(4.0);

__m256i add_one_exp = _mm256_set1_epi64x(0x10000000000000);

uint32_t s1_dx[4] = {}, s1_dy[4] = {};

uint32_t s2_dx[4] = {}, s2_dy[4] = {};

uint32_t s3_dx[4] = {}, s3_dy[4] = {};

uint32_t s4_dx[4] = {}, s4_dy[4] = {};

double cx_mem[4] __attribute__((__aligned__(32)));

double cy_mem[4] __attribute__((__aligned__(32)));

mse.get_next_point(cx_mem[0], cy_mem[0], s1_dx[0], s1_dy[0]);

mse.get_next_point(cx_mem[1], cy_mem[1], s1_dx[1], s1_dy[1]);

mse.get_next_point(cx_mem[2], cy_mem[2], s1_dx[2], s1_dy[2]);

mse.get_next_point(cx_mem[3], cy_mem[3], s1_dx[3], s1_dy[3]);

__m256d s1_cx = *(__m256d * )cx_mem;

__m256d s1_cy = *(__m256d * )cy_mem;

mse.get_next_point(cx_mem[0], cy_mem[0], s2_dx[0], s2_dy[0]);

mse.get_next_point(cx_mem[1], cy_mem[1], s2_dx[1], s2_dy[1]);

mse.get_next_point(cx_mem[2], cy_mem[2], s2_dx[2], s2_dy[2]);

mse.get_next_point(cx_mem[3], cy_mem[3], s2_dx[3], s2_dy[3]);

__m256d s2_cx = *(__m256d * )cx_mem;

__m256d s2_cy = *(__m256d * )cy_mem;

mse.get_next_point(cx_mem[0], cy_mem[0], s3_dx[0], s3_dy[0]);

mse.get_next_point(cx_mem[1], cy_mem[1], s3_dx[1], s3_dy[1]);

mse.get_next_point(cx_mem[2], cy_mem[2], s3_dx[2], s3_dy[2]);

mse.get_next_point(cx_mem[3], cy_mem[3], s3_dx[3], s3_dy[3]);

__m256d s3_cx = *(__m256d * )cx_mem;

__m256d s3_cy = *(__m256d * )cy_mem;

mse.get_next_point(cx_mem[0], cy_mem[0], s4_dx[0], s4_dy[0]);

mse.get_next_point(cx_mem[1], cy_mem[1], s4_dx[1], s4_dy[1]);

mse.get_next_point(cx_mem[2], cy_mem[2], s4_dx[2], s4_dy[2]);

mse.get_next_point(cx_mem[3], cy_mem[3], s4_dx[3], s4_dy[3]);

__m256d s4_cx = *(__m256d * )cx_mem;

__m256d s4_cy = *(__m256d * )cy_mem;

double s1_x_safe0[4] __attribute__((__aligned__(32))) = {};

double s2_x_safe0[4] __attribute__((__aligned__(32))) = {};

double s3_x_safe0[4] __attribute__((__aligned__(32))) = {};

double s4_x_safe0[4] __attribute__((__aligned__(32))) = {};

double s1_y_safe0[4] __attribute__((__aligned__(32))) = {};

double s2_y_safe0[4] __attribute__((__aligned__(32))) = {};

double s3_y_safe0[4] __attribute__((__aligned__(32))) = {};

double s4_y_safe0[4] __attribute__((__aligned__(32))) = {};

uint64_t s1_count_safe0[4] __attribute__((__aligned__(32))) = {};

uint64_t s2_count_safe0[4] __attribute__((__aligned__(32))) = {};

uint64_t s3_count_safe0[4] __attribute__((__aligned__(32))) = {};

uint64_t s4_count_safe0[4] __attribute__((__aligned__(32))) = {};

double s1_x_safe1[4] __attribute__((__aligned__(32))) = {};

double s2_x_safe1[4] __attribute__((__aligned__(32))) = {};

double s3_x_safe1[4] __attribute__((__aligned__(32))) = {};

double s4_x_safe1[4] __attribute__((__aligned__(32))) = {};

double s1_y_safe1[4] __attribute__((__aligned__(32))) = {};

double s2_y_safe1[4] __attribute__((__aligned__(32))) = {};

double s3_y_safe1[4] __attribute__((__aligned__(32))) = {};

double s4_y_safe1[4] __attribute__((__aligned__(32))) = {};

uint64_t s1_count_safe1[4] __attribute__((__aligned__(32))) = {};

uint64_t s2_count_safe1[4] __attribute__((__aligned__(32))) = {};

uint64_t s3_count_safe1[4] __attribute__((__aligned__(32))) = {};

uint64_t s4_count_safe1[4] __attribute__((__aligned__(32))) = {};

while(true) {

if(test_diverge(mse,pp,width,iterations,s1_dx,s1_dy,s1_cmp2,s1_cx,s1_cy,

s1_x,s1_y,s1_count,

s1_x_safe0,s1_y_safe0,s1_count_safe0,

s1_x_safe1,s1_y_safe1,s1_count_safe1)) {

return;

}

if(test_diverge(mse,pp,width,iterations,s2_dx,s2_dy,s2_cmp2,s2_cx,s2_cy,

s2_x,s2_y,s2_count,

s2_x_safe0,s2_y_safe0,s2_count_safe0,

s2_x_safe1,s2_y_safe1,s2_count_safe1)) {

return;

}

if(test_diverge(mse,pp,width,iterations,s3_dx,s3_dy,s3_cmp2,s3_cx,s3_cy,

s3_x,s3_y,s3_count,

s3_x_safe0,s3_y_safe0,s3_count_safe0,

s3_x_safe1,s3_y_safe1,s3_count_safe1)) {

return;

}

if(test_diverge(mse,pp,width,iterations,s4_dx,s4_dy,s4_cmp2,s4_cx,s4_cy,

s4_x,s4_y,s4_count,

s4_x_safe0,s4_y_safe0,s4_count_safe0,

s4_x_safe1,s4_y_safe1,s4_count_safe1)) {

return;

}

// s1_x_squared = s1_x * s1_x;

__m256d s1_x_squared = _mm256_mul_pd(s1_x, s1_x);

__m256d s2_x_squared = _mm256_mul_pd(s2_x, s2_x);

__m256d s3_x_squared = _mm256_mul_pd(s3_x, s3_x);

__m256d s4_x_squared = _mm256_mul_pd(s4_x, s4_x);

// s1_y_squared = s1_y * s1_y;

__m256d s1_y_squared = _mm256_mul_pd(s1_y, s1_y);

__m256d s2_y_squared = _mm256_mul_pd(s2_y, s2_y);

__m256d s3_y_squared = _mm256_mul_pd(s3_y, s3_y);

__m256d s4_y_squared = _mm256_mul_pd(s4_y, s4_y);

// s1_y = 2 * s1_y * s1_x + s1_cy;

iterate4(s1_x, s2_x, s3_x, s4_x, s1_y, s2_y, s3_y, s4_y,

s1_cx, s2_cx, s3_cx, s4_cx, s1_cy, s2_cy, s3_cy, s4_cy,

add_one_exp);

// s1_mag = s1_x_squared + s1_y_squared;

__m256d s1_mag = _mm256_add_pd(s1_x_squared, s1_y_squared);

__m256d s2_mag = _mm256_add_pd(s2_x_squared, s2_y_squared);

__m256d s3_mag = _mm256_add_pd(s3_x_squared, s3_y_squared);

__m256d s4_mag = _mm256_add_pd(s4_x_squared, s4_y_squared);

iterate4(s1_x, s2_x, s3_x, s4_x, s1_y, s2_y, s3_y, s4_y,

s1_cx, s2_cx, s3_cx, s4_cx, s1_cy, s2_cy, s3_cy, s4_cy,

add_one_exp);

s1_cmp_with_four=AVX_CMP_GT_4_64(s1_mag, four_double);

s2_cmp_with_four=AVX_CMP_GT_4_64(s2_mag, four_double);

s3_cmp_with_four=AVX_CMP_GT_4_64(s3_mag, four_double);

s4_cmp_with_four=AVX_CMP_GT_4_64(s4_mag, four_double);

iterate4(s1_x, s2_x, s3_x, s4_x, s1_y, s2_y, s3_y, s4_y,

s1_cx, s2_cx, s3_cx, s4_cx, s1_cy, s2_cy, s3_cy, s4_cy,

add_one_exp);

s1_cmp2 = _mm256_or_pd((__m256d)s1_count, s1_cmp_with_four);

s2_cmp2 = _mm256_or_pd((__m256d)s2_count, s2_cmp_with_four);

s3_cmp2 = _mm256_or_pd((__m256d)s3_count, s3_cmp_with_four);

s4_cmp2 = _mm256_or_pd((__m256d)s4_count, s4_cmp_with_four);

// Decrement counter

s1_count = _mm256_sub_epi64(s1_count, one_int64);

s2_count = _mm256_sub_epi64(s2_count, one_int64);

s3_count = _mm256_sub_epi64(s3_count, one_int64);

s4_count = _mm256_sub_epi64(s4_count, one_int64);

iterate4(s1_x, s2_x, s3_x, s4_x, s1_y, s2_y, s3_y, s4_y,

s1_cx, s2_cx, s3_cx, s4_cx, s1_cy, s2_cy, s3_cy, s4_cy,

add_one_exp);

}

}

Comparing that with our initial version:

| Test A | Test B | Test C | Test D | |

|---|---|---|---|---|

| naive | 973.4 | 68.7 | 41.2 | 3550.7 |

| best from part 1 | 119.7 | 8.8 | 5.4 | 436.8 |

| best current | 98.0 | 7.6 | 4.6 | 356.8 |

All that extra effort, and the speedup has gone from ~8x to just under 10x. This is a good illustration of diminishing returns : I put much more effort into this than I did into part 1, and only get a 1.22x extra speed increase.

Cost of looking back

A final note in this section: I ran the tests again, taking the looking back step out. I just removed the following code:

double sx=x_safe1[b];

double sy=y_safe1[b];

double scx=cx_mem[b];

double scy=cy_mem[b];

while(si >= 0 && sx * sx + sy * sy <= 4.0) {

double nx = sx * sx - sy * sy + scx;

sy = 2.0 * sx * sy + scy;

sx = nx;

si--;

}

to leave:

template<class M> inline __attribute__((always_inline)) bool test_diverge_noadjust(M& mse,

iterations_t* pp, uint32_t width, iterations_t iterations,

uint32_t* dx, uint32_t* dy,

__m256d& cmp2,__m256d& cx,__m256d& cy,__m256d& x,__m256d& y,

__m256i& count, double* x_safe0, double* y_safe0, uint64_t* count_safe0,

double* x_safe1, double* y_safe1, uint64_t* count_safe1) {

if(!_mm256_testz_pd(cmp2, cmp2)) [[unlikely]] {

uint32_t mask = _mm256_movemask_pd(cmp2);

double cx_mem[4] __attribute__((__aligned__(32)));

double cy_mem[4] __attribute__((__aligned__(32)));

double x_mem[4] __attribute__((__aligned__(32)));

double y_mem[4] __attribute__((__aligned__(32)));

uint64_t count_mem[4] __attribute__((__aligned__(32)));

_mm256_store_pd(x_mem, x);

_mm256_store_pd(y_mem, y);

_mm256_store_si256((__m256i * )count_mem, count);

_mm256_store_pd(cx_mem, cx);

_mm256_store_pd(cy_mem, cy);

while(mask != 0) {

uint32_t b = __builtin_ctz(mask);

int64_t si=count_safe1[b];

//double sx=x_safe1[b];

//double sy=y_safe1[b];

//double scx=cx_mem[b];

//double scy=cy_mem[b];

//while(si >= 0 && sx * sx + sy * sy <= 4.0) {

// double nx = sx * sx - sy * sy + scx;

// sy = 2.0 * sx * sy + scy;

// sx = nx;

// si--;

//}

pp[dx[b] + dy[b] * width] = iterations - si;

if(!mse.get_next_point(cx_mem[b], cy_mem[b], dx[b], dy[b])) {

return true;

}

count_mem[b] = iterations;

x_mem[b] = y_mem[b] = 0;

//cmp2_mem[b] = 0;

mask = mask&~(1U<<b);

}

x = _mm256_load_pd(x_mem);

y = _mm256_load_pd(y_mem);

count = _mm256_load_si256((__m256i * )count_mem);

cx = _mm256_load_pd(cx_mem);

cy = _mm256_load_pd(cy_mem);

}

_mm256_store_pd(x_safe1,_mm256_load_pd(x_safe0));

_mm256_store_pd(x_safe0, x);

_mm256_store_pd(y_safe1,_mm256_load_pd(y_safe0));

_mm256_store_pd(y_safe0, y);

_mm256_store_si256((__m256i * )count_safe1,

_mm256_load_si256((__m256i * )count_safe0));

_mm256_store_si256((__m256i * )count_safe0, count);

return false;

}

This gave a large speed boost (showing the last results for comparison):

| Test A | Test B | Test C | Test D | |

|---|---|---|---|---|

| test macro | 100.4 | 7.7 | 4.8 | 364.0 |

| test call | 98.0 | 7.6 | 4.6 | 356.8 |

| test macro (no lookback) | 79.5 | 6.0 | 3.6 | 289.3 |

| test call (no lookback) | 80.3 | 6.0 | 3.8 | 292.2 |

This is a relatively huge speedup for removing code that is not called very much. My best guess is that that either the extra loop causes register pressure that results in spills elsewhere, or some internal CPU caching resource fills up and there is some thrashing going on. More investigation would be needed to determine this.

To get much more speedup would probably involve going to assembler (which I’m not planning on doing anytime soon) - but the results here would not be wasted, as they would provide a very good starting point for that. If I’d jumped into assembler right away, this exploration would have been much more difficult.

Point pruning

Now that I have done as much as I’m going to do for now with the iteration loop, is there any way that I can require less points to be calculated in the first place? There are several options that present themselves.

Eliminating main cardioid and main circle

It is possible to mathematically characterize the main cardioid and the largest circle and not need to iterate points inside them. A point is in the

-

cardioid if \(q(q+(x-\frac{1}{4})) \leq \frac{1}{4}y^2\) where \(q=(x-\frac{1}{4})^2+y^2\),

-

left circle if \((x+1)^2+y^2 \leq \frac{1}{16}\).

It is also possible to map circles inside some of the other bulbs.

This is an inexpensive check, but as I’m optimizing for deeper zoom levels (as deep as can reasonably go with double precision), most interesting images at higher zoom levels won’t overlap with any of those areas, and a later technique (contour following) will deal with this adequately, so this optimization doesn’t fit my use case.

Four corners

I’ve seen some references to testing four corners of a rectangle, and filling the rectangle in if they are the same value, but this creates artifacts unless the rectangles are very small, as it is very easy to find cases where the four corners are the same, but there is structure inside. I’m not comfortable with that level of inaccuracy.

Rectangle checking and subdivision

A somewhat better check is to test all the boundary points of a rectangle, and if all the points on the edge of the rectangle are the same, the fill the whole rectangle in with that color. If they are not the same, then subdivide the rectangle (either into halves or quarters), calculate the points along the new boundary, and retest. Continue until all the rectangles are filled in or there are no interior points left.

This will result in significant performance improvements in most cases without a high risk of artifacts, but is not as performant as contour following.

Contour following

This is more complex to code than rectangle subdivision, but all points calculated by contour following is a subset of those calculated by rectangle subdivision. Given that point calculation is still an expensive operation, it is worth the extra complexity to implement contour following to calculate the minimal number of points.

The basic method is that whenever a pixel as calculated (say the red one) and has neighboring (horizontal or vertical, not diagonal) pixel that is different (say the blue one), calculate the points that could belong along an edge if they aren’t already calculated (shown as white squares in the diagram above), and repeat this checks until there are no further checks to make.

Then fill in the remaining areas with whatever surrounds them.

There is a bit more to it than that:

-

The initial conditions have to be set up,

-

Normally the contour following would be the the outside loop and the iterating would be the inner loop, but I have reversed it, so the contour following has to be built into the state engine.

-

One of the properties of the pipeline that results don’t come out in the order that points went in, so the algorithm can’t assume that.

The algorithm is informally to calculate all the boundary points of the image, and where this is a difference from the neighbor, add the corresponding points inside the boundary to a todo set. When this is all done, generate each point in the todo set, adding to the list as more checks are done.

Written out more carefully, where ‘Return’ indicates to the caller to keep going and ‘Done’ indicates that there are no more points to get:

Each time get_next_point is called:

If the border has not been calculated yet,

Add another point from the border

Return

If the border points haven't been flushed from the the pipeline

Add a 'no-operation'

Return

If this is the first time this point in the algorithm has been reached,

For each of the points next to the border:

If next to a point with a different neighbor

Add to the todo set

If todo set is empty

Done

Remove a point from the todo set, add to the pipeline

Return

Take the point just calculated and add any appropriate surrounding points to todo set

If todo set is empty

If the pipeline hasn't been flushed

Add a 'no-operation'

Return

Done

Remove a point from the todo set, add to the pipeline

At the end, fill in any uncalculated points with value they are surrounded by.

Some notes here:

-

It is important to flush the pipeline, both after border generation and at the end,

-

When a point is added to the todo set, it is marked as ‘generating’. When adding points to the todo set, points that have been calculated or marked as generating don’t get included.

-

I haven’t described how the todo set is set up, and what order points are taken from it, and for completion of the algorithm it doesn’t matter. Two obvious contenders are a FIFO queue, or a LIFO stack (implemented as a vector). The queue will use less memory if set up correctly (in this case it just spirals in from the outside), and the stack is easier to implement and has better memory locality, and indeed runs very slightly faster. I went with a stack, but there would be little difference with any reasonable structure.

Having implemented this (code is below, there is one more thing to do before I present it), I decide to generate a zoomed out thumbnail (zoom=0.225):

What went wrong? The whole Mandelbrot set should be in there.

The problem with any contour or edge following algorithms is that of islands, points or sets of points surrounded by points of the same value. For instance, in the following diagram, the blue points will be missed, because they will never get added to the todo set.

In the next diagram, all the points will be picked up - no islands here.

The general way of dealing with islands is to make sure that sufficient points are added to the todo set at the start. The Mandelbrot Set has a mathematical property called ‘connected’ which (loosely) means that all parts are attached to all other parts - there are no islands except for the whole set. In practice, because I am only checking at discrete points, there may be small features that function as islands at a particular zoom level, but that should be uncommon. I have not observed any of these.

The simplest way to make sure that the Mandelbrot set is not made into an island is: if the area to be rendered contains (0,0), then add a line segment of points from (0,0) to the right hand edge (I go this direction because it is the shortest way out of the expensive black points), like so:

Add this and try again:

Here is the code with the (0,0) check included, on GitHub project as MandelbrotStateEngineEdgeFollow.h, called from Mandelbrot_fastest_contour.cpp.

const iterations_t MAX = std::numeric_limits<iterations_t>::max();

inline void setup_point(double xs, double ys, double inc, double& px, double& py,

uint32_t& x, uint32_t& y, uint32_t w, uint32_t h) {

x = w;

y = h;

px = xs + w * inc;

py = ys - h * inc;

}

struct Point {

Point(uint16_t px, uint16_t py) {x = px;y = py;};

uint16_t x,y;

};

class MandelbrotStateEngineContourFollow {

public:

MandelbrotStateEngineEdgeFollow(iterations_t* pp,

uint32_t pfullwidth, int32_t pfullheight,

uint32_t prender_x, uint32_t prender_y,

uint32_t pwidth, uint32_t pheight,

double pxs, double pys, double pinc, uint32_t) {

fullwidth = pfullwidth;

p = pp + prender_x + fullwidth * prender_y;

width = pwidth;

height = pheight;

dummy_offset = (pfullheight - prender_y - height) * fullwidth - prender_x;

inc = pinc;

xs = pxs + prender_x * inc;

ys = pys - prender_y * inc;

tail = 15;

boundaries_done = false;

wtop = 0;

wbottom = 0;

hleft = 1;

hright = 1;

todo_list.clear();

};

inline void add_to_todo_list(uint32_t x, uint32_t y) {

todo_list.push_back(Point(x,y));

p[x + y * fullwidth] = MAX;

}

bool get_next_point(double& px, double& py, uint32_t&x, uint32_t& y) {

if(!boundaries_done) {

if(wtop<width) {

setup_point(xs,ys,inc,px,py,x,y,wtop,0);

wtop++;

return true;

} else if(wbottom<width) {

setup_point(xs,ys,inc,px,py,x,y,wbottom,height - 1);

wbottom++;

return true;

} else if(hleft<height - 1) {

setup_point(xs,ys,inc,px,py,x,y,0,hleft);

hleft++;

return true;

} else if(hright<height - 1) {

setup_point(xs,ys,inc,px,py,x,y,width - 1,hright);

hright++;

return true;

} else if(tail != 0) {

tail--;

px = py = 0;

x = 0;

y = height;

return true;

}

if(todo_list.empty()) {

// setup initial list of points

for(uint32_t i = 1;i < width - 1;i++) {

iterations_t* q = &p[i + fullwidth];

iterations_t* qq = q - fullwidth;

if(qq[ - 1] != qq[0] || qq[1] != qq[0]) {

add_to_todo_list(i,1);

}

q = &p[i + fullwidth * (height - 2)];

qq = q + fullwidth;

if(qq[ - 1] != qq[0] || qq[1] != qq[0]) {

add_to_todo_list(i,height - 2);

}

}

for(uint32_t j = 1;j < height - 1;j++) {

iterations_t* q = &p[j * fullwidth + 1];

iterations_t* qq = q - 1;

if(qq[ - fullwidth] != qq[0]

|| qq[fullwidth] != qq[0]) {

add_to_todo_list(1,j);

}

q = &p[j * fullwidth + (width - 2)];

qq = q + 1;

if(qq[ - fullwidth] != qq[0]

|| qq[fullwidth] != qq[0]) {

add_to_todo_list(width - 2,j);

}

}

// Deal with the 'Mandelbrot is an island' case

if(xs < 0.0 && xs + width * inc > 0.0

&& ys > 0.0 && ys - height * inc < 0.0) {

// run a set of todo's between origin and the right

// Choose x,y corresponding to 0

uint32_t iy=ys/inc;

if(iy>0 && iy<height) {

uint32_t ix=-xs/inc;

uint32_t s;

for(uint32_t i = s; i < width - 1; i++) {

add_to_todo_list(i,iy);

}

}

}

}

if(todo_list.empty()) {

return false;

}

Point point = todo_list.back();

todo_list.pop_back();

setup_point(xs,ys,inc,px,py,x,y,point.x,point.y);

boundaries_done = true;

return true;

}

if(y<height) {

iterations_t* lastp = p + x + y * fullwidth;

iterations_t val = lastp[0];

const int64_t L = - 1;

const int64_t R = 1;

int64_t U = - fullwidth;

int64_t D = fullwidth;

bool diff0, diff1;

diff0 = (lastp[L] != val) && (lastp[L] != 0) && (lastp[L] != MAX);

diff1 = (lastp[R] != val) && (lastp[R] != 0) && (lastp[R] != MAX);

if(diff0) {

if(lastp[U + L] == 0) {

add_to_todo_list(x - 1,y - 1);

}

if(lastp[D + L] == 0) {

add_to_todo_list(x - 1,y + 1);

}

}

if(diff1) {

if(lastp[U + R] == 0) {

add_to_todo_list(x + 1,y - 1);

}

if(lastp[D + R] == 0) {

add_to_todo_list(x + 1,y + 1);

}

}

if(diff0 || diff1) {

if(lastp[U] == 0) {

add_to_todo_list(x,y - 1);

}

if(lastp[D] == 0) {

add_to_todo_list(x,y + 1);

}

}

diff0 = (lastp[U] != val) && (lastp[U] != 0) && (lastp[U] != MAX);

diff1 = (lastp[D] != val) && (lastp[D] != 0) && (lastp[D] != MAX);

if(diff0) {

if(lastp[U + L] == 0) {

add_to_todo_list(x - 1,y - 1);

}

if(lastp[U + R] == 0) {

add_to_todo_list(x + 1,y - 1);

}

}

if(diff1) {

if(lastp[D + L] == 0) {

add_to_todo_list(x - 1,y + 1);

}

if(lastp[D + R] == 0) {

add_to_todo_list(x + 1,y + 1);

}

}

if(diff0 || diff1) {

if(lastp[L] == 0) {

add_to_todo_list(x - 1, y);

}

if(lastp[R] == 0) {

add_to_todo_list(x + 1, y);

}

}

}

while(!todo_list.empty()) {

Point point = todo_list.back();

todo_list.pop_back();

setup_point(xs,ys,inc,px,py,x,y,point.x,point.y);

tail = 15;

return true;

}

if(tail == 0) {

return false;

}

tail--;

px = py = 0;

x = 0;

y = height;

return true;

}

void cleanup() {

fill<iterations_t>(p,fullwidth,width,height);

}

public:

iterations_t* p;

private:

int32_t fullwidth;

uint32_t width;

uint32_t height;

int32_t dummy_offset;

double xs;

double ys;

double inc;

uint32_t tail;

bool boundaries_done;

uint32_t wtop;

uint32_t wbottom;

uint32_t hleft;

uint32_t hright;

std::vector<Point> todo_list;

};

This is the fill function:

template <typename T> void fill(T* p, uint32_t stride, uint32_t width, uint32_t height) {

for(uint32_t j = 1;j<height - 1;j++) {

T* py = &p[stride * j];

T val = py[0];

for(uint32_t i = 1;i<width - 1;i++) {

if(py[i] == 0) {

py[i] = val;

} else {

val = py[i];

}

}

}

}

I was all ready to jump in with creating a template specialization with AVX for a faster fill, but it turns out that the time taken is in the range 0.0005-0.001 seconds, so that would be an unnecessary optimization.

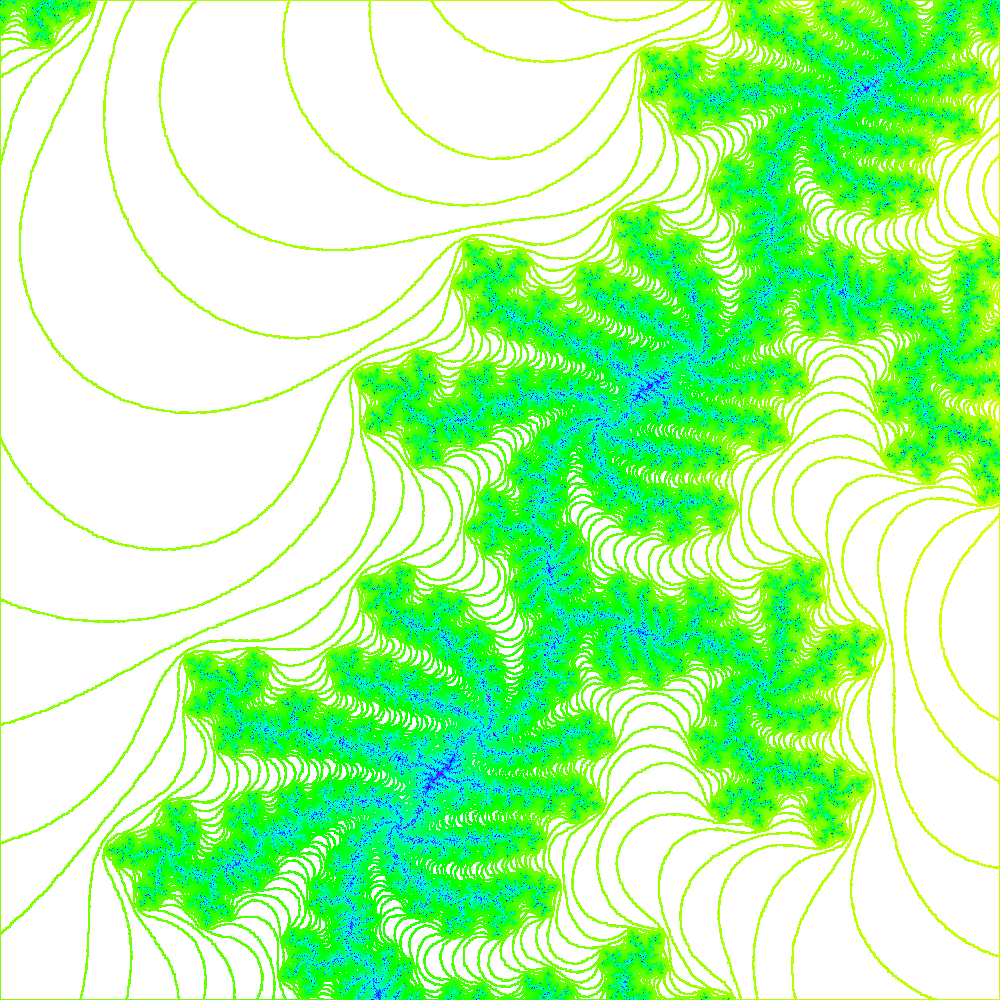

Here is what test C looks like before fill:

| Test A | Test B | Test C | Test D | |

|---|---|---|---|---|

| best non-contour following | 98.0 | 7.6 | 4.6 | 356.8 |

| contour following, 3 iterations | 14.04 | 6.86 | 2.04 | 1.98 |

| contour following, 4 iterations (best) | 13.07 | 7.13 | 2.23 | 1.48 |

For test B, contour following makes very little difference because nearly all the points need to be calculated anyway. Test D is somewhat of a outlier - it was really only included to measure the raw loop performance, so contour following just has to calculate the boundary.

Multi-threading

TL;DR version - there is nothing surprising here, but it does provide a significant performance gain.

Mandelbrot Set generation is what is known as “embarrassingly parallel” in that little effort is required to parallelize the problem.

To multi-thread this, there are some things that do need to be done - the code needs to be designed so that the image can be tiled into several tasks. This has already been done above in the function signature:

template<class M> void Mandelbrot::fastest_so_far(iterations_t iterations,

uint32_t render_x, uint32_t render_y,

uint32_t render_width, uint32_t render_height) {

...

}

The other things that need to be done are to set up the threads (C++-20 makes this easy), and decide how many tasks and how many threads there are. Typically I like to have more tasks than there are threads and have each thread grab a new task as it finishes the last one - this will balance things better if the work per task is uneven. Too many tasks though will add to the overhead, both of the threading, and in my case having edges calculated twice.

The threading code is simple, the main thing to take care of is to have access to the job list guarded by a mutex and make sure the bulk of the processing happens outside the mutex lock (all standard stuff). There is no need to have any locks on the array being written to. Full version in the GitHub project as Mandelbrot.cpp.

void task(Mandelbrot* m, std::mutex* lock, std::vector<DisplayParams>* job_list,

uint32_t iterations) {

while(true) {

lock->lock();

if(job_list->empty()) {

lock->unlock();

return;

}

DisplayParams dp=job_list->back();

job_list->pop_back();

lock->unlock();

m->fastest_so_far<MandelbrotStateEngineEdgeFollow>(iterations,dp.x,dp.y,

dp.width,dp.height);

}

}

void Mandelbrot::render_multithreaded(uint32_t iterations, uint32_t thread_count,

uint32_t divx, uint32_t divy) {

std::vector<DisplayParams> job_list;

std::unique_ptr<std::thread[]> threads =

std::make_unique<std::thread[]>(thread_count);

std::mutex lock;

if(divy==0) {

divy=divx;

}

for(uint32_t j=0;j<divy;j++) {

for(uint32_t i=0;i<divx;i++) {

DisplayParams dp;

dp.x=(width*i)/divx;

dp.y=(height*j)/divy;

dp.width=(width*(i+1))/divx-(width*i)/divx;

dp.height=(height*(j+1))/divy-(height*j)/divy;

job_list.push_back(dp);

}

}

for(uint32_t i=0;i<thread_count;i++) {

threads[i]=std::thread(&task,this,&lock,&job_list,iterations);

}

for(uint32_t i=0;i<thread_count;i++) {

threads[i].join();

}

}

Timing results with 16 tasks:

| Number of threads | Test A | Test B | Test C | Test D |

|---|---|---|---|---|

| unthreaded | 13.07 | 7.13 | 2.23 | 1.48 |

| 1 | 14.29 | 7.18 | 2.46 | 5.27 |

| 2 | 7.58 | 3.70 | 1.29 | 2.73 |

| 3 | 7.41 | 3.59 | 1.19 | 2.72 |

| 4 | 7.25 | 3.47 | 1.19 | 2.57 |

As expected, 1 thread costs more than unthreaded because of the all the extra point calculations along the diving edges - this overhead is only large in test D, which is somewhat of an outlier anyway.

Note: It would be possible to reduce some of this overhead to get slightly faster timing by first calculating all the common edges between the tiles, then the tiles.

Times are nearly halved for 2 threads (there is always Operating System overhead, so timing is never quite halved for doubling the number of threads.

For 3 and 4 threads, there are only small gains. Why is this? I’m running on a Intel(R) Core(TM) i5-4340M CPU, which has 2 cores and 4 total threads (the other 2 threads are from something known as Hyper-Threading, where each core has two threads sharing the resources), and each thread makes pretty good use of the floating point resources, so adding a competing thread doesn’t gain much.

Distributed Computing

This would not seem complete without at least a mention of distributing the task over a network of computers.

In short, given that the slowest test case runs in ~0.37 seconds on slow hardware, it’s not likely to be worth doing.

If the parameters were changed so that it was considered worth doing (say, generating a particularly enormous image), the problems of distributing it would be similar to that multi-threading (i.e nearly none), with the additional step of stitching the images together at the end.

Conclusion

Remembering to divide the timing numbers by 20 to get the timing for a single run, I now have my slowest test case (A) generating a 1000x1000 Mandelbrot Set in ~0.37 seconds, down from 48.6 seconds.

These numbers were generated on quite an old processor. Modern processors should do somewhat better (particularly if they have more cores).

Comparing this with the naive implementation right at the start of part 1:

| Test A | Test B | Test C | Test D | |

|---|---|---|---|---|

| naive | 973.4 | 68.7 | 41.2 | 3550.7 |

| 4 threads | 7.25 | 3.47 | 1.19 | 2.57 |

| speed multiplier | 134 | 19.8 | 34.6 | 1380 |

So using techniques at several levels (using knowledge of the architecture to micro-optimize, an algorithmic approach to only needing to calculate some of the points, and multi-threading) I have managed to get a speed improvement of about 20-130x (leaving out test D as an outlier). Looking at only some of those levels would give a much less performant result.

Here is a rough breakdown of of how much each technique contributes to the best result:

| multiplier | notes | |

|---|---|---|

| Loop optimizations | ~10 | |

| Contour following | ~1-7 | depends on the complexity of the region |

| Software pipelining (2) | ~2 | number of physical cores |

| Total | ~20-130 |

Apart from vectorization and software pipelining using the same framework with pipelining and a state engine, these are largely independent of each other.

I’m sure that this is not the final improvement that could be made in this area - I welcome any other suggestions. Any comments, corrections, observations, or optimizations that I missed? Please feel free to contact me