Introduction

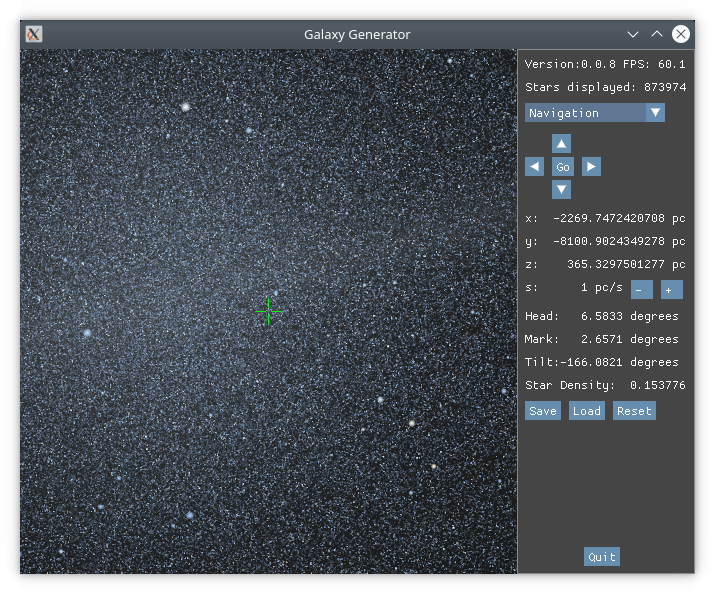

I have been working for some time on a Galaxy Generator project that procedurally generates and allows navigation in a 400 billion star galaxy. Stars are generated/removed as they enter/leave visual range. One of the performance bottlenecks is generating that data (which can be millions of stars per second) and getting it to the GPU for rendering. This post investigates doing that efficiently.

First I look at how a star is modeled and what information needs to be sent to the GPU. This is followed by my efforts to squeeze this into a small an amount of memory as reasonably possible (16 bytes). I finish with some notes about how this can be sped up at the CPU end.

Recent builds of the Galaxy Generator for Android, Linux and Windows can be downloaded here.

Contents

- Introduction

- Contents

- Requirements

- Modeling a star

- Packing a star into 16 bytes

- Some CPU notes

- Conclusion

Requirements

The Galaxy Generator is written using C++ and Vulkan, and primarily targeted at Android phones, although there are Linux and Windows versions too. Being designed for a phone means it needs to run on lower end hardware. In particular:

-

I want to support any GPU that can run Vulkan 1.0.x (assuming the OS is recent enough). This may include GPUs that are integrated with the CPU.

-

The GPU is not required to support double precision - single precision floating point is fine (actually, it’s not really enough, but I am stuck with it, and there are techniques to mitigate this).

-

I want to support any 64-bit ARM (ARMv8-A or later).

-

I want to support any x86-64 bit CPU that supports SSE4.1 and BMI2 (Haswell, Excavator or later). I may later remove the BMI2 requirement to support even older processors.

-

It should run in relatively small amounts of memory - I don’t want the application RAM required to exceed 2 GB.

-

It should be reasonably performant. 60 frames/second is not necessary, but I want it to be useable.

-

In addition to the star information described below being passed to the GPU, I also want to have about 8 bits set aside for flags and future expansion.

-

All C++ code has to compile without warnings with -Wall and -Wextra set on g++ (Linux), clang++ (Android and Linux) and with most of the warnings turned on on Visual Studio (Windows).

Modeling a star

The first thing to do is to decide what information is needed to represent a star.

Some physics

It is not necessary to read this section to follow the rest of this post - feel free to jump to the result

A star that is sufficiently far away can be approximately modeled as a [black body], so all that is needed to render it is the position, radius and temperature (this ignores such things as movement, absorption lines, variability, occlusion by companions, planets and dust). The radius only contributes to how luminous the star is (I am going to assume that ‘sufficiently far’ means it can be treated as a point source), and the temperature contributes to the luminosity and color. So to display a star, there is a mapping from (radius, temperature) -> (luminosity, RGB color). The displayed brightness is function of luminosity and distance.

How to perform this mapping? There are some heuristic ways of doing it, but (with apologies to any physicists or color theorists who might be reading this for the assumptions that I’m going to make) I go back to Plank’s law, partly because it feels cleaner, and partly because then it allows selecting the frequencies to view the stars - for instance, I can set a channel to look at the ultra-violet radiation and display that as blue.

Given a frequency \(\nu\) and a temperature \(T\), the spectral energy density is given by:

\(u_\nu(\nu,T)=\frac{8\pi h \nu^3}{c^3}\frac{1}{e^{\frac{h \nu}{k_B T}}-1}\)

If a detector has a sensitivity function \(s(\nu)\) for how brightly to display the results of received radiation, the brightness by that detector (for a star of radius \(r\) and distance \(d\) away, \(r << d\)) is:

\(B_s(T,r,d)=\frac{\pi r^2}{d^2}\int_{0}^{\infty}{u_\nu(\nu,T)s(\nu)}d\nu\),

If the detector detects all the radiation close to a frequency \(f\) and not further away, it can be modeled by:

\(s(\nu) = \left\{ \begin{array}{ll} C_s & \mbox{if } \nu > f_s-\frac{w_s}{2} \mbox{ and } \nu < f_s+\frac{w_s}{2} \\ 0 & \mbox{otherwise } \end{array} \right.\)

where \(C_s\) is a constant for translating received energy into displayed brightness.

the energy function becomes:

\(B_s(T,r,d)=C_s\frac{\pi r^2}{d^2}\int_{f_s-\frac{w}{2}}^{f_s+\frac{w}{2}}{u_\nu(\nu,T)}d\nu\)

which appoximates to (for sufficiently small \(w_s\)):

\(B_s(T,r,d)\approx C_s\frac{\pi r^2}{d^2}{u_{f_s}(f_s,T)}w_s=C_s w_s \frac{\pi r^2}{d^2}\frac{8\pi h f_s^3}{c^3}\frac{1}{e^{\frac{h f_s}{k_B T}}-1}\)

There are lot of constants that can be collected:

I define \(a_s=\frac{C_s w_s{8\pi^2 h f_s^3}}{c^3}\) and \(b_s={\frac{h f_s}{k_B}}\), so

\(B_s(T,r,d)\approx \frac{r^2}{d^2}\frac{a_s}{e^{\frac{b_s}{T}}-1}\)

Now I just set up three detectors corresponding to red, green and blue wavelengths (the \(a_r,a_g,a_b,b_r,b_g,b_b\) can be precomputed), and I have:

Physics result

\(i_r = \frac{a_r}{e^{\frac{b_r}{T}}-1}\), \(i_g = \frac{a_g}{e^{\frac{b_g}{T}}-1}\) and \(i_b = \frac{a_b}{e^{\frac{b_b}{T}}-1}\)

The brightness is \(I_m=\frac{r^2}{d^2}\mbox{max}(i_r,i_g,i_b)\), and the color components are just given by

\(c_r=\frac{i_r}{i_m}, c_g=\frac{i_g}{i_m}, c_r=\frac{i_b}{i_m}\), noting that one of them will be 1, the others \(0 \leq c_x. \leq 1\).

Where to calculate this?

Firstly, an important note: what I am about to describe is only used for stars that are far enough to regard as point sources, and also dim enough not to want to render them using a small disk. I have other mechanisms for dealing with close and/or very bright stars.

There are two places available to compute: the CPU and the GPU.

The part involving the distance \(d\) will always have to be done on the GPU because it can only be determined at runtime.

The temperature \(T\) and the radius \(r\) will be unchanging for each star (I’m not dealing with variable stars right now).

The other values \(a_r,a_g,a_b,b_r,b_g,b_b\) only vary if the detector settings change.

It it tempting to try and make this is fast as possible by passing the position, radius \(r\) and temperature \(T\) (actually more efficient to pass position, \(r^2\) and \(T^{-1}\) to avoid some GPU operations) for each star to the GPU and let it handle the calculation, either coding the \(a_r,a_g,a_b,b_r,b_g,b_b\) as constants or putting them in the Unified Buffer Object, but there are two reasons not to:

-

Each star may appear in many thousands of frames, so a calculation that could be done once on the CPU ends up being done thousands of times on the GPU, which may increase power use (I don’t have a good way of measuring this). Remembering that the primary target of this is Android, I want to keep the power use as low as possible - I’m writing a space simulator, not a space heater.

-

if \(b_r\), \(b_g\) or \(b_b\) are very large (say for high \(\nu\) and/or low \(T\)), the \({e^{\frac{b_g}{T}}}\) calculation can overflow. It is possible that the GPU does the right thing and ends up calculating \(\frac{1}{\infty -1}=0\), and it’s even possible that this is done without performance penalty, but that seems like unsafe behavior to rely on that may be GPU specific. Adding in a test (if \(\frac{b_g}{T} > 80\) then result \(=0\)) is even more work for the GPU.

I choose to calculate the luminosity \({r^2}\mbox{max}(i_r,i_g,i_b)\) and color \((c_r,c_g,c_b)\) on the CPU (it is relatively easy to vectorize) and send those values to the GPU. Note that the \(\frac{b_g}{T} > 80\) is still needed. The information fits in 20 bytes and might look something like this (it even has room for the 8 bits of flags):

Packing a star into 16 bytes

When there are going to be arrays of a data structure, and the size of an element is a little over a power of 2, I like to see if I can reduce the size to a power of 2. There are several reasons to consider reducing the size if possible:

-

It takes up less space on the GPU (remember that there might be millions of stars). Even on the low end GPUs that this is designed for, this doesn’t seem to be a big consideration.

-

It takes less time to transfer to the GPU. It turns out that this does matter.

-

The might be places at the host end or GPU end that works more efficiently with aligned data. This is certainly true at the host end and I’ve seen some references to Vulkan preferring to work with multiples of 16 for its data structures, but I don’t think it would affect this.

At first sight, the prospects aren’t promising - the structure fills 160 bits, the goal is 128 bits, so 32 bits have to be removed, and we really don’t want to reduce the precision of the coordinates below 32 bit floats, which already aren’t quite adequate.

There are several options:

-

Stars are tiny, so 8 bits of color per component isn’t really needed (it is difficult to differentiate small color changes in small objects) - 5 or 6 bits per component might be enough, say 6 bits per component for a total of 18 bits, which saves 6 bits.

-

The luminosity doesn’t need to be terribly accurate - a half-precision float (or similar - may want slightly larger exponent at the expense of mantissa) might be enough for that - so that saves about 16 bits.

-

IEEE-754 floats have an 8 bit exponent, but the the exponents I use are all in the range 0..63, so the other 2 bits could theoretically be used for other purposes (the grayed out bits in the diagram). This is getting into the weeds of floating-point formats, and doing a little more bit-bashing than I’m comfortable with, but it does give us 6 bits, although they are not conveniently together.

That’s 28 bits - only 4 more needed. Perhaps go with 5 bit color and store the luminosity in 15 bits to get to 32? This is turning into a lot of work (both to code, and for the GPU), and risks not looking quite as good.

At this point, I go back and look at the x,y,z coordinates, and observe that due to the way that floating point is stored, the accuracy of each coordinate varies depending on how close to the zero it is, and a lot of that accuracy is spurious.

An IEEE-754 single precision floating point number is stored like this:

which represents (mantissa in binary): 1.bbbbbbbbbbbbbbbbbbbbbbb * \(2^\mbox{exp}\) (the leading 1 is implicit).

Assuming that a number was rounded correctly when calculated, the maximum error is:

\(\frac{1}{2} 2^{\mbox{exp}-23}\) = \(2^{\mbox{exp}-24}\)

Consider three stars at the following positions (units don’t matter for this discussion, I imagine parsecs):

| Name | x | y | z |

|---|---|---|---|

| A | 1.16 | 1.16 | 1.16 |

| B | 1.42 | 1.42 | 0.02 |

| C | -2.02 | -0.03 | -0.13 |

Looking at the conversion to 1EEE-764 format (I’m not going to worray about how the exponent is stored):

| value | mantissa | mantissa (binary) | exp | max err | |

|---|---|---|---|---|---|

| \(A_{xyz}\) | 1.16 | 1.16 | (1).00101000111101011100001 | 0 | 0.00000005961 |

| \(B_{xy}\) | 1.42 | 1.42 | (1).01101011100001010001111 | 0 | 0.00000005961 |

| \(B_z\) | 0.02 | 1.28 | (1).01000111101011100001010 | -6 | 0.00000000093 |

| \(C_x\) | -1.01 | 1.01 | (1).00000010100011110101110 | 1 | 0.00000011921 |

| \(C_y\) | -0.03 | 1.92 | (1).11101011100001010001111 | -6 | 0.00000000093 |

| \(C_z\) | -0.13 | 1.04 | (1).00001010001111010111000 | -3 | 0.00000000745 |

The high accuracy of \(B_z, C_y, C_z\) is an artifact of them happening to be close to zero, and is more than required, so I renormalize them to have the same exponent as \(B_x, C_x, C_x\) respectively:

| value | mantissa | mantissa (binary) | exp | max err | |

|---|---|---|---|---|---|

| \(A_{xyz}\) | 1.16 | 1.16 | 1.00101000111101011100001 | 0 | 0.00000005961 |

| \(B_{xy}\) | 1.42 | 1.42 | 1.01101011100001010001111 | 0 | 0.00000005961 |

| \(B_z\) | 0.02 | 1.28 | 0.00000101000111101011100 | 0 | 0.00000005961 |

| \(C_x\) | -2.02 | 1.01 | 1.00000010100011110101110 | 1 | 0.00000011921 |

| \(C_y\) | -0.03 | 0.015 | 0.00000011110101110000101 | 1 | 0.00000011921 |

| \(C_z\) | -0.13 | 0.065 | 0.00010000101000111101100 | 1 | 0.00000011921 |

Note that now that some of the numbers are denormalized, the leading 1 now needs to be made explicit.

The mantissa can now be stored as a fixed point integer in 25 bits. Why 25 when the initial mantissa was 23 bits? One extra bit because the 1 can’t be made implicit, and another because if the sign is included and they are stored as two’s-complement, an extra bit is needed to not lose the range.

| two’s complement mantissa | |

|---|---|

| \(A_{xyz}\) | 01.00101000111101011100001 |

| \(B_{xy}\) | 01.01101011100001010001111 |

| \(B_z\) | 00.00000101000111101011100 |

| \(C_x\) | 10.11111101011100001010010 |

| \(C_y\) | 11.11111100001010001111011 |

| \(C_z\) | 11.11101111010111000010100 |

In general, take the largest exponent of the (x,y,z) components, and denormalize the components to use that exponent.

The advantage of doing this is that now the exponent only needs to be stored per star labelled pexp (still requiring 6 bits), and with the mantissa needing 25 bits, the other 7 bits can be used for the color, which gives this as a possible layout:

If the viewpoint is near the origin, a case can be made for using a 26 bits for the mantissa rather than 25 bits, as the view would be more ‘square on’ to coordinates that have low values. That would mean only 6 bits for each color component, or having to store the extra color bits somewhere else (say reducing the precision of the luminosity a bit).

Here is a slightly simplified version of the GLSL vertex shader that renders the stars. It makes use of ldexp() to construct a float out of a mantissa and binary exponent.

layout(location = 0) in int inx;

layout(location = 1) in int iny;

layout(location = 2) in int inz;

layout(location = 3) in int luminosity_scale;

layout(location = 0) out vec3 fragColor;

void main() {

int scale=int(luminosity_scale&0x3F)-SOME_SCALE_FACTOR;

int posx=inx>>7;

int posy=iny>>7;

int posz=inz>>7;

vec3 position = vec3(ldexp(float(posx), scale),

ldexp(float(posy), scale),

ldexp(float(posz), scale)) - ubo.eye_offset;

float distance_squared = dot(position, position);

uint absolute_luminosity_exponent = luminosity_scale >> 24;

float absolute_luminosity = ldexp(float(((luminosity_scale >> 16) & 0xFF)),

int(absolute_luminosity_exponent));

float luminosity = ubo.luminosity_const * absolute_luminosity / distance_squared;

float point_size = max(0.5, sqrt(luminosity));

luminosity = luminosity / (point_size*point_size);

gl_Position = ubo.proj_model_view * vec4(position, 1.0);

gl_PointSize = point_size;

fragColor = min(luminosity, 1.0) * vec3(inx&0x7F, iny&0x7F, inz&0x7F) * (1.0/127);

}

This could be taken a step further, and instead of having intensity and (r,g,b) stored separately, I could have stored an intensity for red, an intensity for green and an intensity for blue, using the same shared exponent technique. For this variant, it is definitely worth going for the 26 bit mantissa. Storing 9 bits per color component allows the full range of colors to be used in the final result. This gives 11 bits left over for flags (f0 and f1). but that would require a little more work in the GPU.

Some CPU notes

There are a couple of efficiency considerations to consider at the CPU end.

Fast copying of buffers

There are essentially two types of GPU memory that are used for rendering: memory that is host visible, which is typical on integrated GPUs, or memory that is not host visible, which is typical for external GPUs on a separate card, although many external GPUs have a small pool of memory that is also host visible (my GeForce GT 730M does not).

In Vulkan terms, the buffers that the image should be rendered from will have the VK_MEMORY_PROPERTY_DEVICE_LOCAL_BIT set. If theVK_MEMORY_PROPERTY_HOST_VISIBLE_BIT is set, vkMapMemory can be accessed to read/write that memory. Otherwise vkCmdCopyBuffer will be required.

In order to write the new stars to the GPU, they are first put in a staging buffer before being copied to the render buffer. The copying needs to be fast, because the rendering is stalled while that is happening.

Because the ordering of adding and removing stars is different, the places in the render buffer that need to be written out get very fragmented.

There are two cases.

Non host-visible memory

If VK_MEMORY_PROPERTY_HOST_VISIBLE_BIT is not set, then a staging buffer needs to created with VK_MEMORY_PROPERTY_HOST_VISIBLE_BIT, and the process becomes:

Create the staging buffer using vkCreateBuffer+vkAllocateMemory+vkBindBufferMemory (or reuse)

vkMapMemory the staging buffer so it can be written to directly

Write the new star data to the staging buffer

If VK_MEMORY_PROPERTY_HOST_COHERENT_BIT not set on the staging buffer:

Use vkFlushMappedMemoryRanges on the staging buffer

vkUnmapMemory the staging buffer

Within the appropriate Vulkan locks:

Create command buffer with vkCmdCopyBuffer, and run it

Deallocate the staging buffer using vkDestroyBuffer+vkFreeMemory (or mark for reuse)

Host-visible memory

If VK_MEMORY_PROPERTY_HOST_VISIBLE_BIT is set, the process above could be used, but this is much faster:

Allocate the staging buffer in ordinary RAM (or reuse)

Write the new star data to the staging buffer

Within the appropriate Vulkan locks:

vkMapMemory the render buffer so it can be written to directly

Use memory operations to copy from staging buffer to render buffer

If VK_MEMORY_PROPERTY_HOST_COHERENT_BIT not set on the render buffer:

Use vkFlushMappedMemoryRanges on the render buffer

vkUnmapMemory the render buffer

Deallocate the staging buffer (or mark for reuse)

In particular, copying from the staging buffer to the render buffer can be sped up if the alignment is a multiple of 16. Because of the fragmentation of the render buffer, avoiding the memcpy overhead is a large speed up:

if((align&~0xf)==0) {

for(uint32_t i=0;i<size_or_count;i++) {

const VkBufferCopy& vbc=copy_regions[i];

uint8_t* d=dest+vbc.dstOffset;

uint8_t* s=((uint8_t*)src)+vbc.srcOffset;

#if __x86_64 || _M_X64

for(uint32_t j=0;j<vbc.size;j+=16) {

_mm_store_si128((__m128i*)(d+j),_mm_load_si128((__m128i*)(s+j)));

}

#elif __aarch64__

for(uint32_t j=0;j<vbc.size;j+=16) {

vst1q_u8(d+j,vld1q_u8(s+j));

}

#else

for(uint32_t j=0;j<vbc.size;j+=8) {

uint64_t s8=(uint64_t*)(s+j);

uint64_t d8=(uint64_t*)(d+j);

*d8=*s8;

}

#endif

}

} else {

for(uint32_t i=0;i<size_or_count;i++) {

const VkBufferCopy& vbc=copy_regions[i];

memcpy(dest+vbc.dstOffset,((uint8_t*)src)+vbc.srcOffset,vbc.size);

}

}

I don’t have any quantified information, but when I added the optimizations for VK_MEMORY_PROPERTY_HOST_VISIBLE_BIT and alignment, the host visible memory case (on my Intel(R) Core(TM) i5-4340M with integrated Intel® HD Graphics 4600) went from being very jerky (large fractions of a second delay if moving fast through a dense star field) to quite smooth. There was a bug where this path was not used properly, but that is fixed in Galaxy Generator version 0.0.10.

If there is enough interest, I can release the lightweight ManagedBuffer class that I wrote to encapsulate all of this - I’d have to clean up some dependencies before I did that (contact me and ask if you would use something like this).

Possible optimization: direct writes

It might be possible that the use of 16 byte stars in the host-visible memory case allows avoiding the locks altogether.

For the stars to align for this particular optimization, conditions for this would be:

-

That it is allowed to render from memory that is mapped using

vkMapMemory- I’ve read that this is ok, but haven’t tried it yet. -

That 16 bit x86-64/SSE and ARM/NEON writes are atomic - this is required to avoid tearing. It is certainly a single machine code instruction on each architecture, and I’ve read hints that this is true for all but ancient x86-64 CPUs (still looking for a good reference for that), but haven’t found any information for ARM.

-

An alternative approach would be to use one of the flags as a ‘do not render’ bit, so set the bit, write the rest of the star, then clear the bit.

-

Another alternative is that the tearing might be so rare and brief that it doesn’t matter.

-

Another approach in this case is to not even set up a staging buffer (or technically have a staging buffer of size 1), and continuously write the stars as they are generated, although this would also require the VK_MEMORY_PROPERTY_HOST_COHERENT_BIT to be set on the render buffer.

This is an optimization I might consider using in future.

Vectorizing the luminosity/color calculation

Most of this is reasonably straightforward. I use SSE (128 bit) rather than AVX (256 bit), as the code matches more closely with ARM NEON, which is only 128 bit. This makes the coding a little easier, and ARM NEON is the main optimization target anyway.

Also, the exp() function in math.h is not vectorized, so here is a low precision (but good enough) implementation of that, based on something I found on Stack Overflow. If I later decide this is not accurate enough, I would make a more accurate version (by adding terms) using the Remez Algorithm.

#if __x86_64 || _M_X64

inline __m128 fast_exp_sse(__m128 x) {

__m128 l2e=_mm_set1_ps(1.442695041f); /* log2(e) */

__m128 c0=_mm_set1_ps(0.3371894346f);

__m128 c1=_mm_set1_ps(0.657636276f);

__m128 c2=_mm_set1_ps(1.00172476f);

/* exp(x)=2^i * 2^f; i=floor (log2(e) * x), 0 <= f <= 1 */

__m128 t=_mm_mul_ps(x, l2e); /* t=log2(e) * x */

__m128 e=_mm_floor_ps(t); /* floor(t) */

__m128i i=_mm_cvtps_epi32(e); /* (int)floor(t) */

__m128 f=_mm_sub_ps(t, e); /* f=t - floor(t) */

/* p=(c0 * f + c1) * f + c2 ~= 2^f */

__m128 p=_mm_add_ps(_mm_mul_ps(_mm_add_ps(_mm_mul_ps(c0,f),c1),f),c2);

/* r=p * 2^i*/

return _mm_castsi128_ps(_mm_add_epi32(_mm_slli_epi32(i, 23), _mm_castps_si128 (p)));

}

#elif __aarch64__

inline float32x4_t fast_exp_neon(float32x4_t x) {

float32x4_t t=vmulq_n_f32(x,1.442695041f);

int32x4_t i=vcvtmq_s32_f32(t);

float32x4_t f=vsubq_f32(t,vcvtq_f32_s32(i));

float32x4_t c0=vdupq_n_f32(0.3371894346f);

float32x4_t c1=vdupq_n_f32(0.657636276f);

float32x4_t c2=vdupq_n_f32(1.00172476f);

float32x4_t p=vmlaq_f32(c2,f,vmlaq_f32(c1,f,c0));

/* r=p * 2^i*/

return vreinterpretq_f32_s32(vaddq_s32(vshlq_n_s32(i,23),vreinterpretq_s32_f32(p)));

}

#endif

It is hard to measure the performance of the luminosity/color calculation because in my code it is integrated with star generation itself, but it seems to take less than 2% of the total CPU time, and star generation can be done in a separate thread from any other calculation going on. I’m calling that good enough for now.

Conclusion

I set up a model for calculating the luminosity and color of a star using Plank’s Law, using vector instructions for calculating this relatively efficiently on a CPU. A method of denormalization and a shared exponent was used to pack this plus the coordinate data into 16 bytes. Lastly, in the case of GPUs with host-visible memory, there are some optimizations that can be performed to transfer the data efficiently.

The result is what I think is a fairly fast smooth rendering of a changing star field even on low end devices - but feel free to draw your own conclusions.

I welcome any comments, corrections, observations, or improvement suggestions. Please feel free to contact me